
How CyberGRX cut ML processing time from 8 days to 56 minutes with AWS Step Functions Distributed Map


Last December, Sébastien Stormacq wrote about the availability of a distributed map state for AWS Step Functions, a new feature that allows you to orchestrate large-scale parallel workloads in the cloud. That’s when Charles Burton, a data systems engineer for a company called CyberGRX, found out about it and refactored his workflow, reducing the processing time for his machine learning (ML) processing job from 8 days to 56 minutes. Before, running the job required an engineer to constantly monitor it; now, it runs in less than an hour with no support needed. In addition, the new implementation with AWS Step Functions Distributed Map costs less than the original one did.

What CyberGRX achieved with this solution is a perfect example of what serverless technologies embrace: letting the cloud do as much of the undifferentiated heavy lifting as possible so the engineers and data scientists have more time to focus on what’s important for the business. In this case, that means continuing to improve the model and the processes for one of the key offerings from CyberGRX, a cyber risk assessment of third parties using ML insights from its large and growing database.

What’s the business challenge?

CyberGRX shares third-party cyber risk management (TPCRM) data with their customers. They predict, with high confidence, how a third-party company will respond to a risk assessment questionnaire. To do this, they have to run their predictive model on every company in their platform; they currently have predictive data on more than 225,000 companies. Whenever there’s a new company or the data changes for a company, they regenerate their predictive model by processing their entire dataset. Over time, CyberGRX data scientists improve the model or add new features to it, which also requires the model to be regenerated.

The challenge is running this job for 225,000 companies in a timely manner, with as few hands-on resources as possible. The job runs a set of operations for each company, and every company calculation is independent of other companies. This means that in the ideal case, every company can be processed at the same time. However, implementing such a massive parallelization is a challenging problem to solve.

First iteration

With that in mind, the company built their first iteration of the pipeline using Kubernetes and Argo Workflows, an open-source container-native workflow engine for orchestrating parallel jobs on Kubernetes. These were tools they were familiar with, as they were already using them in their infrastructure.

But as soon as they tried to run the job for all the companies on the platform, they ran up against the limits of what their system could handle efficiently. Because the solution depended on a centralized controller, Argo Workflows, it was not robust, and the controller was scaled to its maximum capacity during this time. At that time, they only had 150,000 companies. Running the job with all of the companies took around 8 days, during which the system would crash and need to be restarted. It was very labor intensive, and it always required an engineer on call to monitor and troubleshoot the job.

The tipping point came when Charles joined the Analytics team at the beginning of 2022. One of his first tasks was to do a full model run on the approximately 170,000 companies they had at that time. The model run lasted the whole week and ended at 2:00 AM on a Sunday. That’s when he decided their system needed to evolve.

Second iteration

With the pain of the last time he ran the model fresh in his mind, Charles thought through how he could rewrite the workflow. His first thought was to use AWS Lambda and Amazon SQS, but he realized that he needed an orchestrator in that solution. That’s why he chose Step Functions, a serverless service that helps you automate processes, orchestrate microservices, and create data and ML pipelines; plus, it scales as needed.

Charles got the new version of the workflow with Step Functions working in about 2 weeks. The first step he took was adapting his existing Docker image to run in Lambda using Lambda’s container image packaging format. Because the container already worked for his data processing tasks, this update was simple. He scheduled Lambda provisioned concurrency to make sure that all the functions he needed were ready when he started the job. He also configured reserved concurrency to make sure that Lambda would be able to handle this maximum number of concurrent executions at a time. To support so many functions executing at the same time, he raised the per-account concurrent execution quota for Lambda.
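
As a rough illustration of the two concurrency settings described above, the following Python sketch applies them through a boto3-style Lambda client. The function name `configure_concurrency`, the alias, and the numbers are hypothetical; the article does not show the actual configuration, and the scheduling of provisioned concurrency and the account quota increase are left out.

```python
from typing import Any


def configure_concurrency(
    lambda_client: Any,
    function_name: str,
    alias: str,
    concurrency: int,
) -> None:
    """Apply reserved and provisioned concurrency to one Lambda function.

    `lambda_client` is expected to behave like boto3.client("lambda").
    All argument values are placeholders chosen for illustration.
    """
    # Reserved concurrency sets aside this many concurrent executions for
    # the function (and also caps it at that number).
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=concurrency,
    )
    # Provisioned concurrency keeps execution environments initialized
    # ahead of time. It must target a published version or an alias.
    lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,
        ProvisionedConcurrentExecutions=concurrency,
    )
```

With boto3 installed and credentials configured, a call such as `configure_concurrency(boto3.client("lambda"), "score-company", "live", 1000)` would issue the two API requests; the function and alias names here are made up.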

To make sure that the steps ran in parallel, he used Step Functions and the map state. The map state allowed Charles to run a set of workflow steps for each item in a dataset, with the iterations running in parallel. Because the Step Functions map state offers 40 concurrent executions and CyberGRX needed more parallelization, they created a solution that launched multiple state machines in parallel; in this way, they were able to iterate fast across all the companies. Creating this complex solution required a preprocessor that handled the heuristics of the concurrency of the system and split the input data across multiple state machines.
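
The core of such a preprocessor can be pictured with a minimal Python sketch. The article does not describe the actual heuristics, so the function names and the even-split strategy below are assumptions for illustration only; the one figure taken from the text is the limit of 40 concurrent iterations per map state.

```python
from typing import List, Sequence

# Concurrent iterations of a single map state, per the article.
MAP_STATE_CONCURRENCY = 40


def plan_state_machine_inputs(
    company_ids: Sequence[str], state_machines: int
) -> List[List[str]]:
    """Split company IDs into at most `state_machines` near-equal batches.

    Hypothetical helper: each batch would become the input of one
    state machine execution.
    """
    if state_machines <= 0:
        raise ValueError("state_machines must be positive")
    # Ceiling division; max() keeps the step valid for an empty input.
    batch_size = max(1, -(-len(company_ids) // state_machines))
    return [
        list(company_ids[start:start + batch_size])
        for start in range(0, len(company_ids), batch_size)
    ]


def effective_parallelism(state_machines: int) -> int:
    """Upper bound on simultaneous iterations across all state machines."""
    return state_machines * MAP_STATE_CONCURRENCY


if __name__ == "__main__":
    ids = [f"company-{n}" for n in range(10)]
    for batch in plan_state_machine_inputs(ids, 3):
        print(len(batch), batch)
    print(effective_parallelism(3))
```

Even in this simplified form, the caller has to decide how many state machines to launch and how to start, track, and retry each one, which hints at why the real preprocessor became a complex part of the system.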

This second iteration was already better than the first one: it was able to finish the execution with no problems, and it could iterate over 200,000 companies in 90 minutes. However, the preprocessor was a very complex part of the system, and it was hitting the limits of the Lambda and Step Functions APIs due to the amount of parallelization.

Third and final iteration

Then, during AWS re:Invent 2022, AWS announced a distributed map for Step Functions, a new type of map state that allows you to write Step Functions workflows that coordinate large-scale parallel workloads. Using this new feature, you can easily iterate over millions of objects stored in Amazon Simple Storage Service (Amazon S3), and the distributed map can launch up to 10,000 parallel sub-workflows to process the data.

When Charles read in the News Blog article about the 10,000 parallel workflow executions, he immediately thought about trying this new state. In a couple of weeks, Charles built the new iteration of the workflow.

Because the distributed map state split the input into different processors and handled the concurrency of the different executions, Charles was able to drop the complex preprocessor code.
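
To make the simplification concrete, here is a hedged sketch of what a distributed map state machine definition can look like, built as a Python dictionary in Amazon States Language form. The state names, the CSV input format, and the bucket, key, and function ARN values are placeholders, not the actual CyberGRX definition.

```python
import json
from typing import Any, Dict


def build_distributed_map_definition(
    bucket: str,
    key: str,
    lambda_arn: str,
    max_concurrency: int = 10_000,
) -> Dict[str, Any]:
    """Return a state machine definition with one distributed map state."""
    return {
        "Comment": "Illustrative only: one Lambda invocation per input row",
        "StartAt": "ProcessCompanies",
        "States": {
            "ProcessCompanies": {
                "Type": "Map",
                # Upper bound on child workflow executions running at once.
                "MaxConcurrency": max_concurrency,
                # Read the items to iterate over from a file in Amazon S3.
                # A CSV file with a header row is an assumption here.
                "ItemReader": {
                    "Resource": "arn:aws:states:::s3:getObject",
                    "ReaderConfig": {
                        "InputType": "CSV",
                        "CSVHeaderLocation": "FIRST_ROW",
                    },
                    "Parameters": {"Bucket": bucket, "Key": key},
                },
                # The sub-workflow that runs for each item.
                "ItemProcessor": {
                    "ProcessorConfig": {
                        "Mode": "DISTRIBUTED",
                        "ExecutionType": "STANDARD",
                    },
                    "StartAt": "ScoreCompany",
                    "States": {
                        "ScoreCompany": {
                            "Type": "Task",
                            "Resource": "arn:aws:states:::lambda:invoke",
                            "Parameters": {
                                "FunctionName": lambda_arn,
                                "Payload.$": "$",
                            },
                            "End": True,
                        }
                    },
                },
                "End": True,
            }
        },
    }


if __name__ == "__main__":
    definition = build_distributed_map_definition(
        "example-input-bucket",  # placeholder bucket name
        "runs/companies.csv",  # placeholder object key
        "arn:aws:lambda:us-east-1:123456789012:function:score-company",
    )
    print(json.dumps(definition, indent=2))
```

The splitting and concurrency handling that the preprocessor used to do is expressed here by `ItemReader`, `MaxConcurrency`, and the `DISTRIBUTED` processor mode, so no custom batching code is needed.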

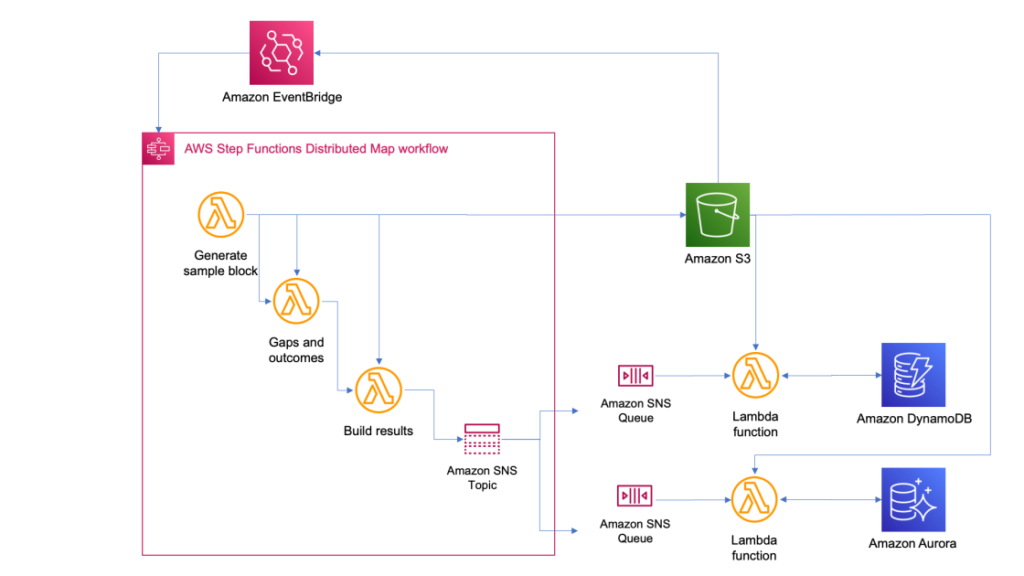

The new process is the simplest it has ever been; now, whenever they want to run the job, they just upload a file to Amazon S3 with the input data. This action triggers an Amazon EventBridge rule that targets the state machine with the distributed map. The state machine then executes with that file as an input and publishes the results to an Amazon Simple Notification Service (Amazon SNS) topic.
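
The trigger described above can be sketched as an EventBridge event pattern that matches Amazon S3 "Object Created" events, plus a small helper that pulls the file location out of such an event. The bucket name, key prefix, and function names are hypothetical, and the sketch assumes the bucket has EventBridge notifications turned on.

```python
from typing import Any, Dict, Tuple


def build_s3_upload_event_pattern(bucket: str, key_prefix: str) -> Dict[str, Any]:
    """Event pattern matching new objects under `key_prefix` in `bucket`."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": key_prefix}]},
        },
    }


def extract_input_location(event: Dict[str, Any]) -> Tuple[str, str]:
    """Return (bucket, key) from an S3 'Object Created' event."""
    detail = event["detail"]
    return detail["bucket"]["name"], detail["object"]["key"]


if __name__ == "__main__":
    # Trimmed-down sample event with placeholder values.
    sample_event = {
        "source": "aws.s3",
        "detail-type": "Object Created",
        "detail": {
            "bucket": {"name": "example-input-bucket"},
            "object": {"key": "runs/companies.csv", "size": 1024},
        },
    }
    print(build_s3_upload_event_pattern("example-input-bucket", "runs/"))
    print(extract_input_location(sample_event))
```

A rule with this pattern and the state machine as its target would start one execution per uploaded file; the state machine could then read the bucket and key from the event it receives as input.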

What was the impact?

A few weeks after completing the third iteration, they had to run the job on all 227,000 companies in their platform. When the job finished, Charles’ team was blown away; the whole process took only 56 minutes to complete. They estimated that during those 56 minutes, the job ran more than 57 billion calculations.
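
For a sense of scale, the figures quoted in this article can be turned into a back-of-the-envelope comparison. The inputs below are the article's rounded numbers ("around 8 days", "more than 57 billion"), so the results are approximations; the two runs also covered different numbers of companies, which is why the sketch compares per-company throughput as well as wall-clock time.

```python
from typing import Dict

MINUTES_PER_DAY = 24 * 60


def summarize_runs() -> Dict[str, float]:
    """Compare the first and final iterations using the article's figures."""
    first_minutes = 8 * MINUTES_PER_DAY  # first iteration: about 8 days
    first_companies = 150_000
    final_minutes = 56  # final iteration
    final_companies = 227_000
    calculations = 57e9  # "more than 57 billion"

    first_rate = first_companies / first_minutes  # companies per minute
    final_rate = final_companies / final_minutes
    return {
        "wall_clock_speedup": first_minutes / final_minutes,
        "first_companies_per_minute": first_rate,
        "final_companies_per_minute": final_rate,
        "throughput_gain": final_rate / first_rate,
        "calculations_per_second": calculations / (final_minutes * 60),
    }


if __name__ == "__main__":
    for name, value in summarize_runs().items():
        print(f"{name}: {value:,.1f}")
```

Under these assumptions the run is roughly 200 times shorter in wall-clock terms, about 300 times faster per company, and averages on the order of 17 million calculations per second.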

The following image shows an Amazon CloudWatch graph of the concurrent executions for one Lambda function during the time that the workflow was running. There are almost 10,000 functions running in parallel during this time.

Simplifying and shortening the time to run the job opens a lot of possibilities for CyberGRX and the data science team. The benefits started right away, the moment one of the data scientists wanted to run the job to test some improvements they had made to the model. They were able to run it independently without requiring an engineer to help them.

And, because the predictive model itself is one of the key offerings from CyberGRX, the company now has a more competitive product, since the predictive analysis can be refined on a daily basis.

Learn more about using AWS Step Functions:

You can also check the Serverless Workflows Collection that we have available in Serverless Land to test and learn more about this new capability.

— Marcia